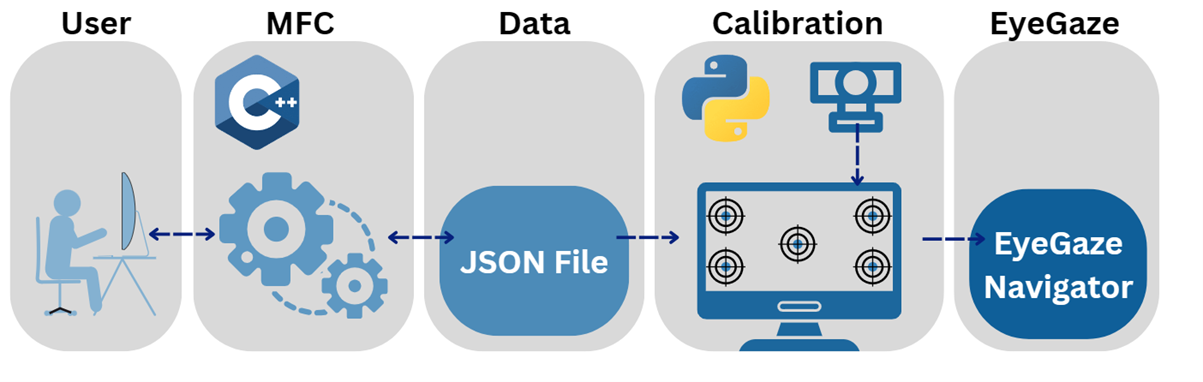

EyeGaze System Architecture

The EyeGaze architecture is designed so that the user first interacts with an MFC, which communicates back to the EyeGaze JSON file. The calibration settings are then established from that file. Once calibration is complete, the user has control over their computer system.

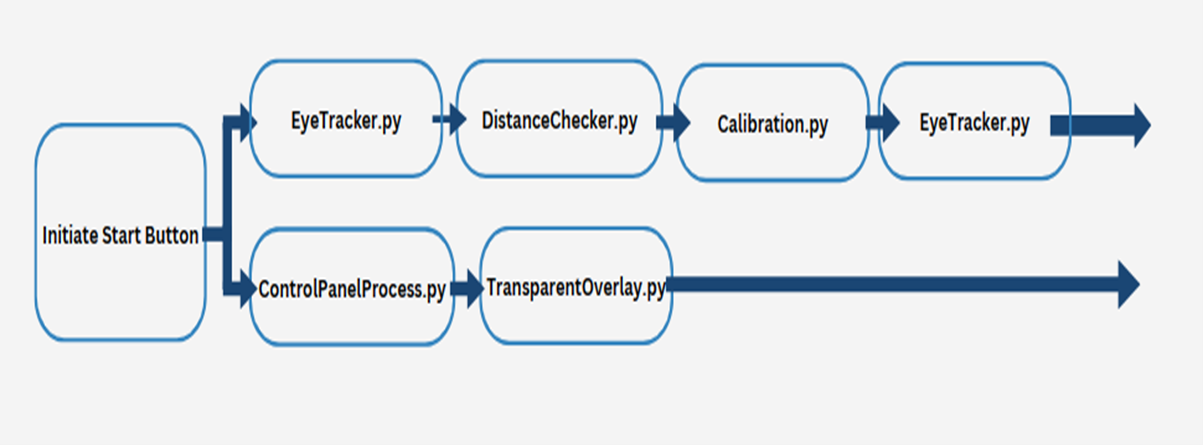
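As a rough illustration of the step where calibration settings are established from the EyeGaze JSON file, the sketch below reads a JSON document and fills in defaults for anything missing. The `calibration` key, the setting names, and the default values are hypothetical and chosen only for the example; the real file layout may differ.

```python
import json

# Hypothetical defaults; the real EyeGaze JSON file may use other keys.
DEFAULT_CALIBRATION = {"calibration_points": 9, "samples_per_point": 30}


def load_calibration_settings(json_text: str) -> dict:
    """Parse JSON text and merge its 'calibration' section over the defaults."""
    data = json.loads(json_text)
    overrides = data.get("calibration", {})
    # Values from the file win over the defaults.
    return {**DEFAULT_CALIBRATION, **overrides}


if __name__ == "__main__":
    example = '{"calibration": {"calibration_points": 5}}'
    print(load_calibration_settings(example))
```

Merging over defaults keeps calibration usable even when the file only specifies some of the settings.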

Sequence Diagrams

The diagram below illustrates the sequence in which our files are executed.

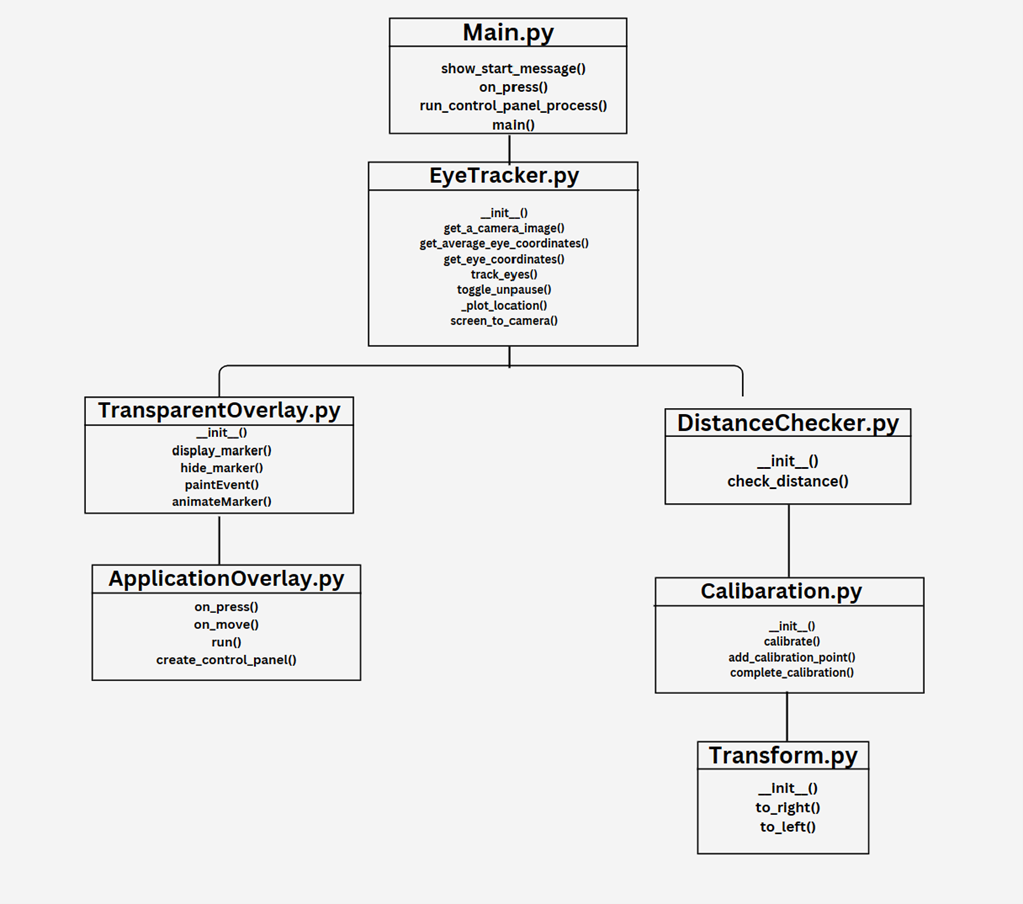

Class Diagrams

Below is a breakdown of each class and its methods. A visual representation can be seen at the end of the section.

Main.py

The Main.py script orchestrates the application's workflow. It contains key functions such as:

- show_start_message(): Displays an initial message or greeting to the user upon application startup.

- on_press(): Defines actions to be taken when specific buttons are pressed.

- run_control_panel_process(): Initiates the control panel process which allows for user interaction with the application's settings.

- main(): This is the central method that calls other initial setup functions and enters the main event loop of the application.
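One way on_press() could map specific keys to actions is a small dispatch table. The sketch below is an assumption-laden illustration, not the code in Main.py: the `TrackerState` class, the `make_on_press` helper, and the key bindings `p` and `q` are all hypothetical stand-ins.

```python
from typing import Callable, Dict


class TrackerState:
    """Hypothetical stand-in for the state that Main.py would control."""

    def __init__(self) -> None:
        self.paused = False
        self.running = True

    def toggle_unpause(self) -> None:
        # Flip between paused and running tracking.
        self.paused = not self.paused

    def stop(self) -> None:
        self.running = False


def make_on_press(state: TrackerState) -> Callable[[str], bool]:
    """Build an on_press handler that dispatches key names to actions."""
    # Key bindings are assumptions made for this example.
    actions: Dict[str, Callable[[], None]] = {
        "p": state.toggle_unpause,
        "q": state.stop,
    }

    def on_press(key: str) -> bool:
        action = actions.get(key)
        if action is None:
            return False  # Key is not bound to anything.
        action()
        return True

    return on_press


if __name__ == "__main__":
    state = TrackerState()
    on_press = make_on_press(state)
    on_press("p")
    print(state.paused)
```

Keeping the bindings in one dictionary makes it easy to add or change shortcuts without touching the handler itself.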

EyeTracker.py

EyeTracker.py is dedicated to tracking and interpreting eye movements using the following methods:

- __init__(): Constructor to initialize the eye tracker with necessary configuration.

- get_a_camera_image(): Captures a single frame from the camera for processing.

- get_average_eye_coordinates(): Computes the average position of the eyes over multiple frames to improve accuracy.

- get_eye_coordinates(): Retrieves the current coordinates of the user's gaze.

- track_eyes(): The continuous loop that captures camera frames and determines the gaze position.

- toggle_unpause(): Allows the tracking process to be paused or resumed, useful for calibration or when user interaction is required.

- plot_location(): Visualizes the detected gaze point on the user's screen.

- screen_to_camera(): Translates screen coordinates to the camera's coordinate system, necessary for aligning the gaze with elements on the screen.
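The averaging and coordinate translation described above can be sketched in plain Python. This is a minimal sketch under stated assumptions: the function signatures are illustrative rather than those of EyeTracker.py, and the screen-to-camera mapping is assumed to be simple proportional scaling, whereas the real mapping may depend on calibration data.

```python
from statistics import fmean
from typing import Iterable, Tuple

Point = Tuple[float, float]


def get_average_eye_coordinates(samples: Iterable[Point]) -> Point:
    """Average (x, y) gaze samples collected over several frames."""
    points = list(samples)
    if not points:
        raise ValueError("at least one sample is required")
    return (fmean(p[0] for p in points), fmean(p[1] for p in points))


def screen_to_camera(point: Point, screen_size: Point, camera_size: Point) -> Point:
    """Scale a screen coordinate into the camera frame.

    Assumes a purely proportional mapping (no offset, rotation, or
    calibration correction), which is a simplification for illustration.
    """
    x, y = point
    screen_w, screen_h = screen_size
    camera_w, camera_h = camera_size
    return (x * camera_w / screen_w, y * camera_h / screen_h)


if __name__ == "__main__":
    print(get_average_eye_coordinates([(0, 0), (2, 4)]))
    print(screen_to_camera((960, 540), (1920, 1080), (640, 480)))
```

Averaging over several frames trades a small amount of latency for a steadier gaze estimate, which is why it is kept separate from the single-frame get_eye_coordinates() call.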

TransparentOverlay.py

This module controls a transparent GUI overlay with methods such as:

- __init__(): Sets up the overlay with any initial configurations.

- display_marker(): Places visual markers on the overlay, used to indicate gaze position or for interactive elements.

- hide_marker(): Removes visual markers from the overlay.

- paintEvent(): A Qt-specific event handler that is called when the overlay needs to be repainted, such as when markers are moved or animated.

- animateMarker(): Adds animation to the markers, providing visual feedback or cues to the user.

ApplicationOverlay.py

ApplicationOverlay.py is responsible for handling the interactive portion of the application's overlay through methods like:

- on_press(): Responds to press events within the control panel.

- on_move(): Detects and handles cursor movements within the overlay area.

- run(): Contains the logic to run the overlay, typically by entering an event loop.

- create_control_panel(): Sets up a control panel which can include buttons, sliders, and other interactive elements for user settings.

DistanceChecker.py

This utility module ensures the user is at the correct distance for eye tracking to function properly:

- __init__(): Initializes any variables or settings required for distance checking.

- check_distance(): Regularly measures the distance of the user from the camera and alerts if the distance is outside the operational range.
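A distance check of this kind might be sketched as follows. The approach shown, estimating distance from the pixel gap between the eyes with a pinhole-camera approximation, is an assumption and not necessarily how DistanceChecker.py works; the operational range, focal length, and average eye gap are hypothetical values.

```python
class DistanceChecker:
    """Illustrative distance check based on the apparent gap between the eyes."""

    def __init__(self, min_cm: float = 40.0, max_cm: float = 80.0,
                 focal_length_px: float = 600.0, eye_gap_cm: float = 6.3) -> None:
        # All defaults are assumed values chosen for the example.
        self.min_cm = min_cm
        self.max_cm = max_cm
        self.focal_length_px = focal_length_px
        self.eye_gap_cm = eye_gap_cm

    def estimate_distance_cm(self, eye_gap_px: float) -> float:
        """Pinhole approximation: distance = focal length * real size / pixel size."""
        if eye_gap_px <= 0:
            raise ValueError("eye_gap_px must be positive")
        return self.focal_length_px * self.eye_gap_cm / eye_gap_px

    def check_distance(self, eye_gap_px: float) -> str:
        """Return 'too_close', 'too_far', or 'ok' for the measured eye gap."""
        distance = self.estimate_distance_cm(eye_gap_px)
        if distance < self.min_cm:
            return "too_close"
        if distance > self.max_cm:
            return "too_far"
        return "ok"


if __name__ == "__main__":
    checker = DistanceChecker()
    print(checker.check_distance(63))
```

Returning a status string rather than showing an alert directly keeps the check easy to test and lets the caller decide how to warn the user.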

Packages and APIs Defined

This section lists the APIs and packages used in our eye-tracking software and their specific purposes.

- keyboard (0.13.5): Utilized for reading and controlling the keyboard. Facilitates keyboard shortcuts for actions like pausing or resuming tracking.

- MediaPipe (0.10.9): Provides real-time face and eye detection capabilities, crucial for tracking the user's gaze with minimal latency.

- NumPy (1.24.3): Supports large, multi-dimensional arrays and matrices, essential for numerical analyses related to gaze point calculations and screen coordinate transformations.

- opencv_contrib_python (4.9.0.80): Essential for image processing tasks, including capturing webcam video, detecting eyes, and interpreting gaze direction.

- PyAutoGUI (0.9.54): Allows for programmatically controlling the mouse and keyboard based on the user's gaze, providing a hands-free interface.

- pynput (1.7.6): Complements keyboard controls by offering detailed control and monitoring of input devices for complex interactions.

- pywin32 (305.1): Provides access to Windows APIs for tasks like interfacing with the OS to manage application windows or access system functionalities.

- PyWinCtl (0.3): Enables controlling windows on Windows, useful for adjusting application windows based on user gaze or creating immersive experiences.

- screeninfo (0.8.1): Fetches information about the screen, vital for accurately mapping gaze points to screen locations.

- PyQt5 (5.15.7): Used for developing the graphical user interface, allowing users to interact with the software through a visually appealing and intuitive interface.
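Because the software depends on specific package versions, it can help to verify the environment before launching. The sketch below compares installed versions against the versions listed above using the standard library's `importlib.metadata`. The `PINNED` dictionary and the `find_mismatches` helper are illustrative names and are not part of the project.

```python
from importlib import metadata

# Versions as listed in this section.
PINNED = {
    "keyboard": "0.13.5",
    "mediapipe": "0.10.9",
    "numpy": "1.24.3",
    "opencv-contrib-python": "4.9.0.80",
    "PyAutoGUI": "0.9.54",
    "pynput": "1.7.6",
    "pywin32": "305.1",
    "PyWinCtl": "0.3",
    "screeninfo": "0.8.1",
    "PyQt5": "5.15.7",
}


def find_mismatches(pinned=PINNED, get_version=metadata.version):
    """Return {package: installed_version_or_None} for every mismatch."""
    problems = {}
    for name, wanted in pinned.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            installed = None  # Package is not installed at all.
        if installed != wanted:
            problems[name] = installed
    return problems


if __name__ == "__main__":
    for name, installed in find_mismatches().items():
        print(f"{name}: expected {PINNED[name]}, found {installed}")
```

Passing the lookup function as a parameter lets the check be exercised without installing any of the packages.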